Change Detection from Mobile Light Field Cameras

This work presents a simple, closed-form solution to change detection from moving light field cameras. The task is complicated by the nonuniform apparent motion of static scene elements as the camera moves. By synthesizing adjacent frames as they would appear from a fixed viewpoint, we reduce the problem to that of a stationary camera.

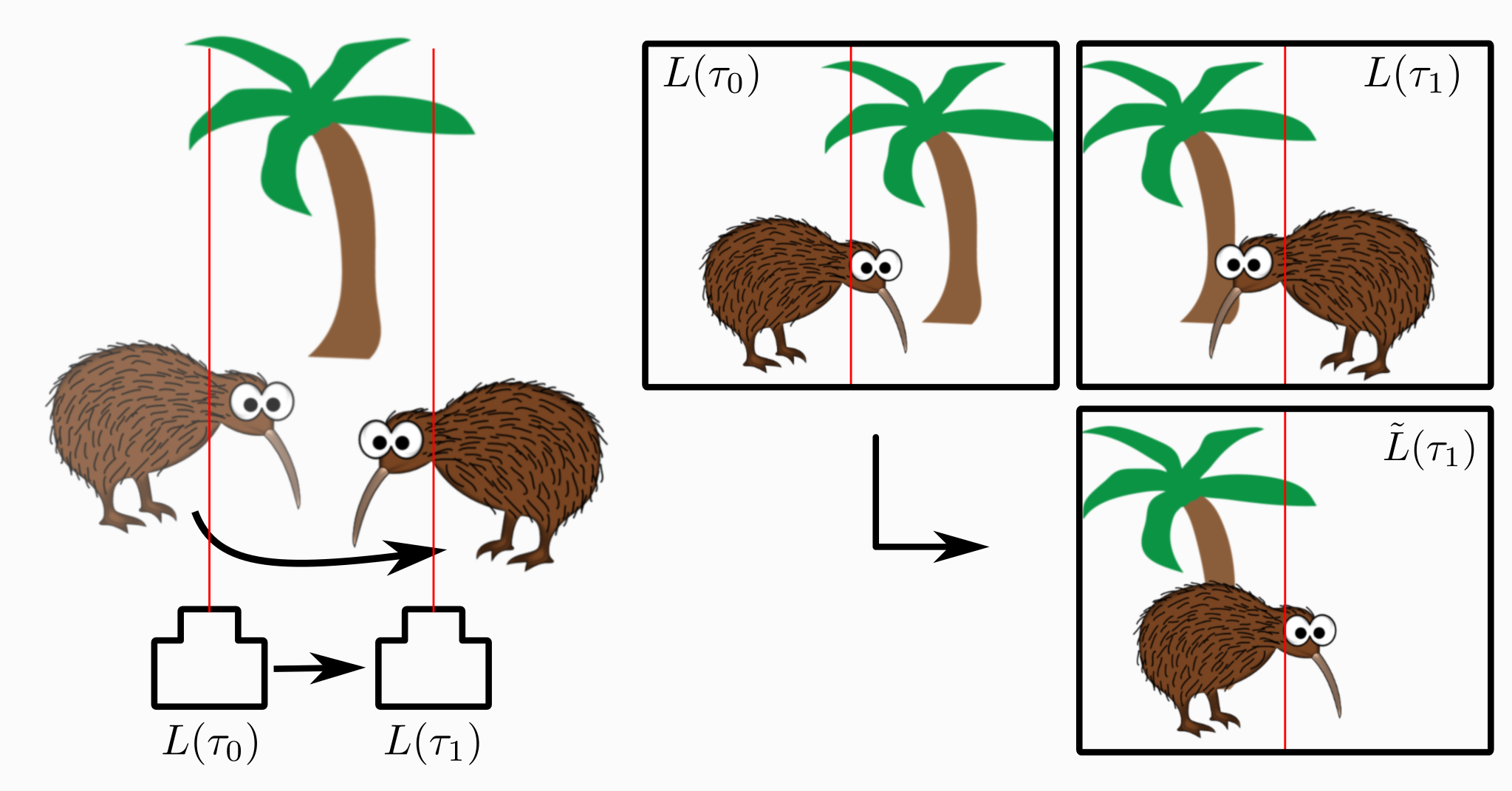

Above, camera motion between two capture times (left) causes apparent motion in static scene elements like the tree (top insets), making them difficult to distinguish from genuinely dynamic elements like the Kiwi. We render a novel view (bottom-right) showing the scene content from one time as seen from the camera's viewpoint at the other. Static elements now appear static, opening a family of dynamic-camera problems to static-camera solutions. No 3D model of the scene is required; instead, the geometry implicitly encoded in the light field is exploited directly. In the case of change detection, this process yields a closed-form solution.

- No explicit scene model is formed

- Closed-form, constant runtime

- Simple behaviour and failure modes

- Easily parallelizable: GPU, FPGA, etc.

- Outperforms competing single-camera methods for common scenes

- Approach generalizes to other moving-camera problems

Publications

• D. G. Dansereau, S. B. Williams, and P. I. Corke, “Simple change detection from mobile light field cameras,” Computer Vision and Image Understanding (CVIU), vol. 145C, pp. 160–171, 2016.

• D. G. Dansereau, S. B. Williams, and P. I. Corke, “Closed-form change detection from moving light field cameras,” in IROS Workshop on Alternative Sensing for Robotic Perception, 2015.

Collaborators

This work was a collaboration between Donald Dansereau and Peter Corke from the Australian Centre for Robotic Vision at QUT, and Stefan Williams from the Marine Robotics Group of the Australian Centre for Field Robotics, University of Sydney.

Presentations

Presentation from the 2015 IROS Workshop on Alternative Sensing for Robotic Perception

Acknowledgments

This work was supported by the Australian Centre for Field Robotics (Project DP150104440) and the Australian Research Council Centre of Excellence for Robotic Vision (Project CE140100016). Thanks to the reviewers, Dr. Linda Miller and Dr. Jürgen Leitner for their helpful suggestions.

Gallery

A single, static light field yields six plenoptic motion components through simple closed-form expressions. The motion components describe the change in the light field as a function of changes in camera pose.
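The exact closed-form expressions are given in the CVIU paper. As a rough illustration only, the sketch below computes the two in-plane translational components by finite differences. It assumes a relative two-plane parameterization (s, t, u, v) in which an x or y camera translation shifts every ray along s or t without changing its direction, so that to first order the light field changes by the translation times ∂L/∂s or ∂L/∂t (the sign depends on the pose convention). The remaining four components (z translation and the rotations) also depend on u and v and are not reproduced here. The helper names `partial` and `translational_components`, and the dictionary storage, are hypothetical.

```python
from itertools import product
from typing import Dict, Tuple

Index = Tuple[int, int, int, int]   # (s, t, u, v) sample index
LightField = Dict[Index, float]     # sampled 4D light field


def partial(lf: LightField, shape: Index, axis: int, spacing: float = 1.0) -> LightField:
    """Finite-difference derivative of a sampled light field along one axis.

    Uses central differences in the interior and one-sided differences at the ends.
    """
    n = shape[axis]
    if n < 2:
        raise ValueError("need at least two samples along the differentiated axis")
    out: LightField = {}
    for idx in lf:
        i = idx[axis]
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        before = idx[:axis] + (lo,) + idx[axis + 1:]
        after = idx[:axis] + (hi,) + idx[axis + 1:]
        out[idx] = (lf[after] - lf[before]) / ((hi - lo) * spacing)
    return out


def translational_components(lf: LightField, shape: Index) -> Tuple[LightField, LightField]:
    """Hypothetical helper: x- and y-translation motion components.

    Assumes a relative two-plane parameterization, where an x (y) camera
    translation T shifts rays along s (t), so dL ~= T * dL/ds (T * dL/dt).
    """
    return partial(lf, shape, axis=0), partial(lf, shape, axis=1)


if __name__ == "__main__":
    shape = (3, 3, 4, 4)
    # Toy light field that varies linearly in s, t and u.
    lf = {idx: 2.0 * idx[0] + 3.0 * idx[1] + idx[2]
          for idx in product(*(range(k) for k in shape))}
    m_x, m_y = translational_components(lf, shape)
    print(m_x[(1, 1, 2, 2)], m_y[(0, 2, 3, 0)])  # 2.0 3.0
```

Storing samples in a dictionary keeps the sketch dependency-free; a real implementation would more likely use dense arrays and vectorized gradients.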

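The change in the light field caused by any small camera motion is well approximated by a linear combination of these six components. This forms the basis for closed-form change detection: dynamic scene elements are not well explained by the motion components, so they stand out as large residuals once the best-fitting combination has been removed.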
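A minimal sketch of this idea, assuming ordinary least squares: treat the light field difference ΔL between two frames as a vector, fit weights p in ΔL ≈ Σ p_i M_i over the motion components M_i, and flag samples whose residual exceeds a threshold. The function names, the threshold, and the plain (non-robust) fit are illustrative assumptions; the published method may weight or threshold differently. The toy components in the example are hypothetical stand-ins for real plenoptic motion components.

```python
from typing import List, Sequence, Tuple


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small dense system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("singular system: motion components are linearly dependent")
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def detect_change(ref: Sequence[float], cur: Sequence[float],
                  components: Sequence[Sequence[float]],
                  threshold: float) -> Tuple[List[float], List[bool]]:
    """Fit dL ~= sum_i p_i * M_i by least squares and flag samples the fit cannot explain."""
    if len(ref) != len(cur) or any(len(c) != len(ref) for c in components):
        raise ValueError("frames and components must have the same number of samples")
    diff = [c - r for r, c in zip(ref, cur)]
    k = len(components)
    # Normal equations (M^T M) p = M^T dL, one column of M per motion component.
    mtm = [[sum(x * y for x, y in zip(components[i], components[j])) for j in range(k)]
           for i in range(k)]
    mtd = [sum(x * d for x, d in zip(components[i], diff)) for i in range(k)]
    p = solve(mtm, mtd)
    residual = [d - sum(p[i] * components[i][n] for i in range(k))
                for n, d in enumerate(diff)]
    return p, [abs(r) > threshold for r in residual]


if __name__ == "__main__":
    n = 10
    offset = [1.0] * n                   # hypothetical toy component 1
    ramp = [float(i) for i in range(n)]  # hypothetical toy component 2
    ref = [0.0] * n
    cur = [0.5 * a + 0.2 * b for a, b in zip(offset, ramp)]
    cur[7] += 4.0                        # one "dynamic" sample
    p, mask = detect_change(ref, cur, [offset, ramp], threshold=2.0)
    print([i for i, flag in enumerate(mask) if flag])  # [7]
```

Because only a handful of weights are estimated, the normal equations stay tiny (6 × 6 for six components), which is consistent with the closed-form, fixed-cost character described above.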

A shift in perspective accomplished by adding motion components to the input light field. The virtual viewpoint has been translated towards the Lorikeet relative to the measured view.
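To first order, a small virtual camera motion can be rendered by adding the motion components, each scaled by the corresponding pose change, to the measured light field. The sketch below assumes flattened light fields and precomputed components; `render_virtual_view` is a hypothetical name, and the 1D sine example uses an analytic derivative as a stand-in for the x-translation component under a relative two-plane parameterization.

```python
import math
from typing import List, Sequence


def render_virtual_view(lf: Sequence[float],
                        components: Sequence[Sequence[float]],
                        weights: Sequence[float]) -> List[float]:
    """First-order view synthesis: L_virtual ~= L + sum_i w_i * M_i (small motions only)."""
    if len(components) != len(weights):
        raise ValueError("need one weight per motion component")
    out = list(lf)
    for comp, w in zip(components, weights):
        if len(comp) != len(out):
            raise ValueError("component and light field sizes differ")
        out = [o + w * c for o, c in zip(out, comp)]
    return out


if __name__ == "__main__":
    # Toy 1D slice along s: L(s) = sin(0.3 s); its s-derivative stands in for
    # the x-translation motion component.
    s = [0.5 * i for i in range(40)]
    lf = [math.sin(0.3 * x) for x in s]
    m_x = [0.3 * math.cos(0.3 * x) for x in s]
    shift = 0.1
    virtual = render_virtual_view(lf, [m_x], [shift])
    truth = [math.sin(0.3 * (x + shift)) for x in s]
    # Only second-order error remains, bounded here by 0.5 * 0.1**2 * 0.09.
    print(max(abs(a - b) for a, b in zip(virtual, truth)))
```

The approximation degrades as the virtual motion grows, since the neglected higher-order terms scale with the square of the shift.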

A pair of frames from a video in which the camera moves, causing nonuniform apparent motion. A naive change detection approach based on per-pixel differencing highlights much of the static scene as dynamic. The proposed closed-form method correctly singles out the dynamic elements.
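The contrast between the two approaches can be illustrated on a 1D toy signal standing in for one row of a light field. Naive differencing flags every sample whose value changed, whereas removing the best-fitting multiple of a single motion component (here a finite-difference gradient of the reference frame) leaves only the dynamic sample. This is a deliberately simplified, single-component illustration with hypothetical function names, not the published implementation.

```python
from typing import List, Sequence


def naive_mask(ref: Sequence[float], cur: Sequence[float], threshold: float) -> List[bool]:
    """Per-pixel differencing: flags every sample whose value changed."""
    return [abs(c - r) > threshold for r, c in zip(ref, cur)]


def gradient(values: Sequence[float]) -> List[float]:
    """Central differences inside, one-sided at the ends (unit spacing)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    out = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        out.append((values[hi] - values[lo]) / (hi - lo))
    return out


def compensated_mask(ref: Sequence[float], cur: Sequence[float],
                     component: Sequence[float], threshold: float) -> List[bool]:
    """Remove the best-fitting multiple of one motion component, then threshold."""
    diff = [c - r for r, c in zip(ref, cur)]
    energy = sum(m * m for m in component)
    if energy == 0.0:
        raise ValueError("motion component is identically zero")
    p = sum(m * d for m, d in zip(component, diff)) / energy  # 1D least squares
    return [abs(d - p * m) > threshold for d, m in zip(diff, component)]


if __name__ == "__main__":
    n = 20
    ref = [0.1 * i * i for i in range(n)]           # static scene, frame 1
    cur = [0.1 * (i + 0.5) ** 2 for i in range(n)]  # same scene after a small camera shift
    cur[10] += 3.0                                  # one genuinely dynamic sample
    component = gradient(ref)
    print(sum(naive_mask(ref, cur, 0.5)))  # 15
    print([i for i, f in enumerate(compensated_mask(ref, cur, component, 0.5)) if f])  # [10]
```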

State-of-the-art single-camera methods fail when an object's projected motion is parallel to the apparent motion induced by the camera. Here a dynamic element translates to the right, but the structure-from-motion (SfM) based approach misinterprets this motion as disparity due to depth and misses the change, whereas the proposed method detects it.

Additional examples showing successful highlighting of dynamic scene elements. Note the false positives for the proposed method near image edges, corresponding to scene elements entering or leaving the field of view between frames.