NOCaL: Calibration-Free Odometry

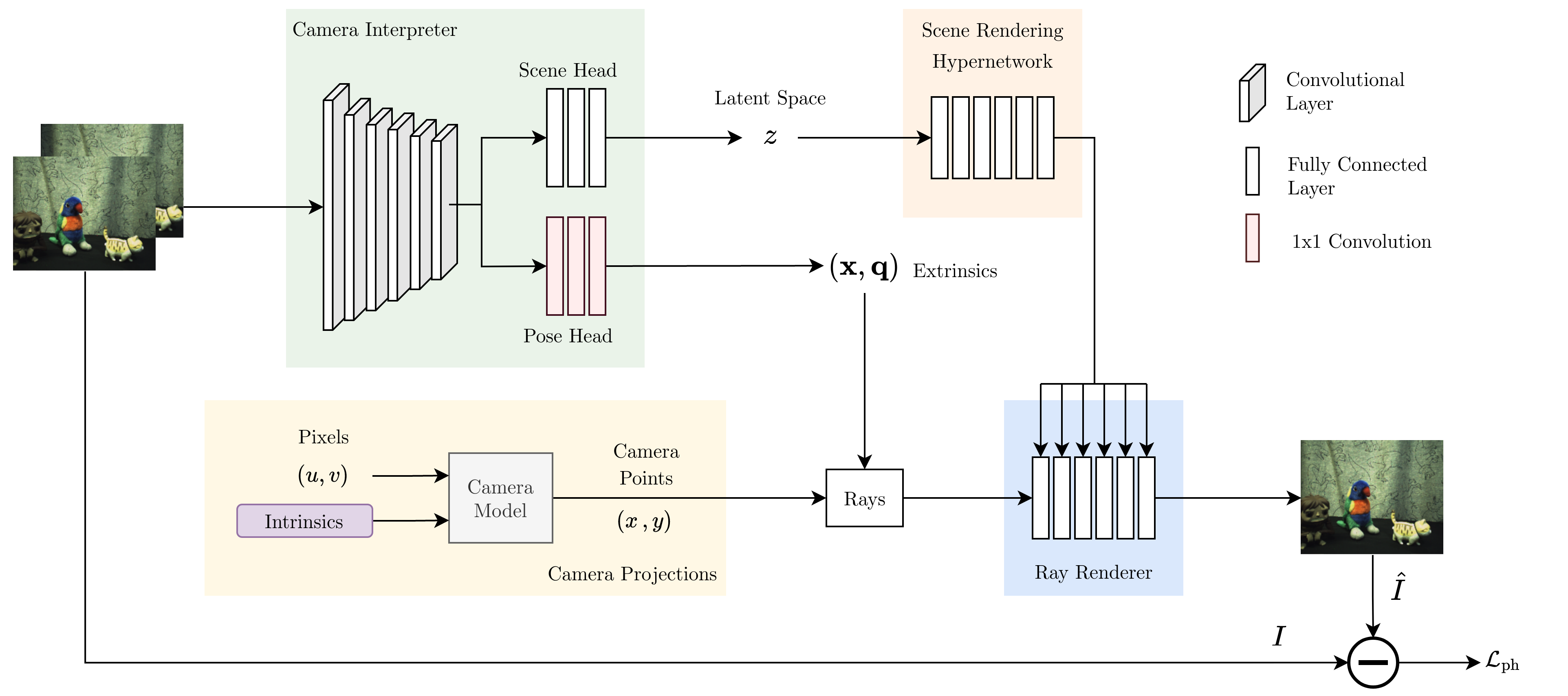

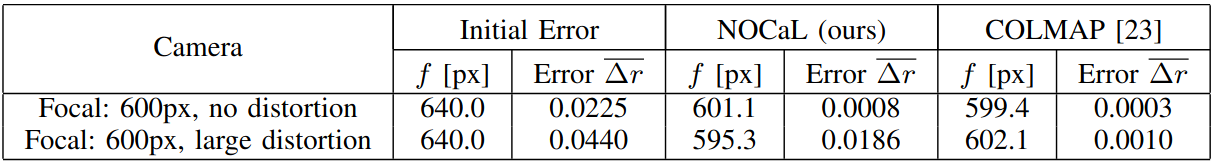

There are a multitude of emerging imaging technologies that could benefit robotics. However, the need for bespoke models, calibration and low-level processing is a key barrier to their adoption. In this work we present NOCaL (Neural Odometry and Calibration using Light fields), a semi-supervised learning architecture capable of interpreting cameras without calibration.

- NOCaL automatically interprets previously unseen cameras by jointly learning to estimate novel views, odometry, and camera parameters.

- A rendering hypernetwork allows NOCaL to benefit from a large pool of existing data.
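A common way for a rendering hypernetwork to draw on many existing scenes is to learn one shared mapping from a short per-scene latent code to the weights of a small rendering network. The sketch below is a generic illustration of that idea, not NOCaL's architecture. It assumes a single linear hypernetwork, a one-hidden-layer MLP that maps a 6-D ray to RGB, and the hypothetical names `HyperNetwork`, `generate` and `render_ray`.

```python
import math
import random


def linear(W, b, x):
    """Return W @ x + b for a weight matrix W stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


class HyperNetwork:
    """Hypothetical sketch: a linear map from a latent scene code z to every
    parameter of a small ray-to-RGB MLP (one hidden ReLU layer)."""

    def __init__(self, latent_dim, ray_dim=6, hidden=16, out_dim=3, seed=0):
        rng = random.Random(seed)
        # Parameter shapes of the generated MLP: W1, b1, W2, b2.
        self.shapes = [(hidden, ray_dim), (hidden,), (out_dim, hidden), (out_dim,)]
        self.n_params = sum(math.prod(s) for s in self.shapes)
        # Shared hypernetwork weights (would be trained across many scenes).
        self.H = [[rng.gauss(0.0, 0.1) for _ in range(latent_dim)]
                  for _ in range(self.n_params)]
        self.h_bias = [0.0] * self.n_params

    def generate(self, z):
        """Produce the MLP parameters [W1, b1, W2, b2] for latent code z."""
        flat = linear(self.H, self.h_bias, z)
        params, i = [], 0
        for shape in self.shapes:
            n = math.prod(shape)
            chunk, i = flat[i:i + n], i + n
            if len(shape) == 2:  # reshape a flat chunk into a rows x cols matrix
                rows, cols = shape
                params.append([chunk[r * cols:(r + 1) * cols] for r in range(rows)])
            else:
                params.append(chunk)
        return params


def render_ray(params, ray):
    """Evaluate the generated MLP on one 6-D ray, returning RGB in (0, 1)."""
    W1, b1, W2, b2 = params
    h = [max(0.0, a) for a in linear(W1, b1, ray)]  # ReLU hidden layer
    return [1.0 / (1.0 + math.exp(-a)) for a in linear(W2, b2, h)]  # sigmoid
```

For example, `render_ray(HyperNetwork(latent_dim=8).generate([0.0] * 8), ray)` returns `[0.5, 0.5, 0.5]` for any 6-D `ray`, because a zero code yields an all-zero MLP. In a design like this, `H` is shared by every scene while each scene only contributes its own latent code, which is what lets a large pool of data shape the prior.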

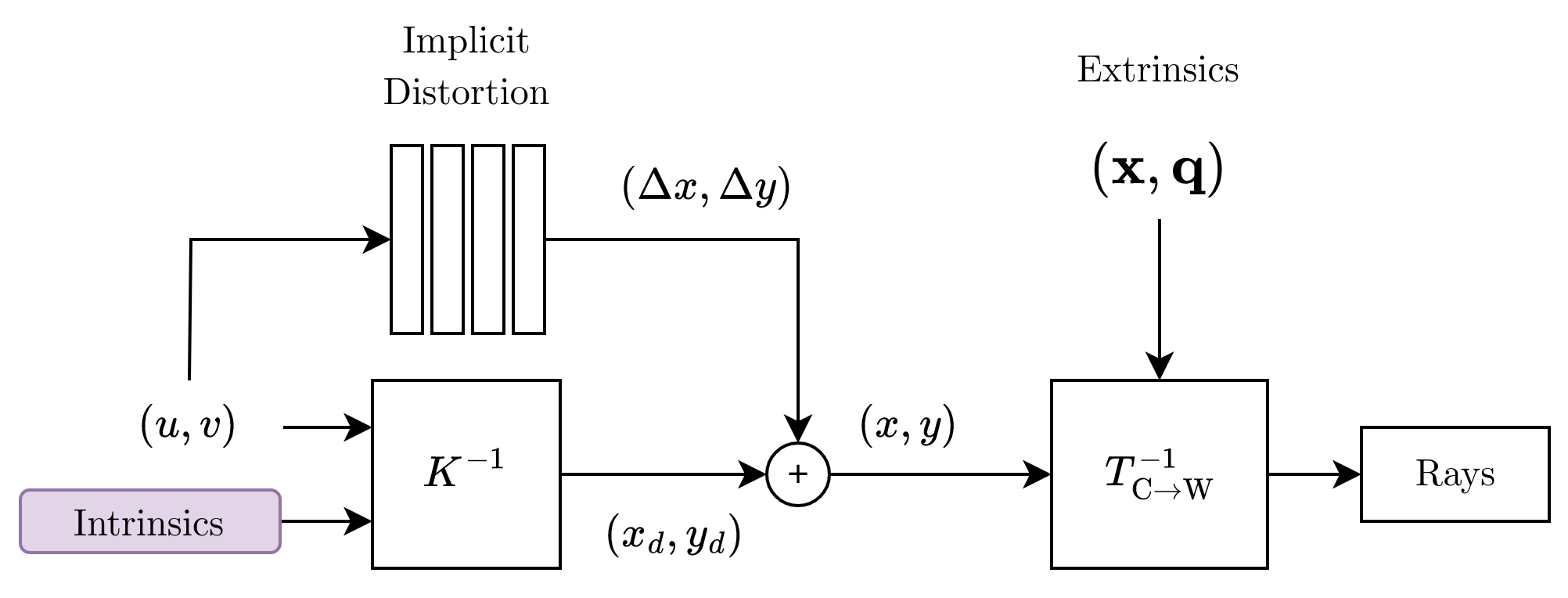

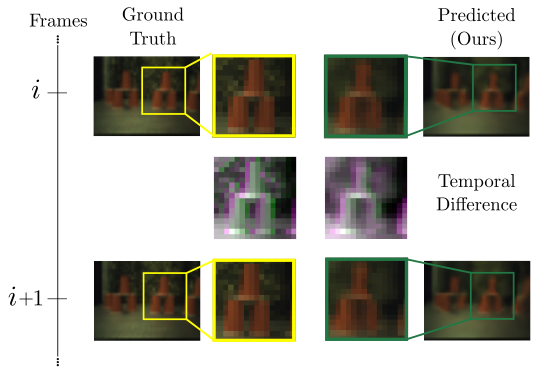

- We propose using a ray-based light field rendering network to supervise odometry. Because it generates views in a camera-agnostic way, NOCaL can be used with many different cameras, even ones that have not been created yet.

This allows a broad range of monocular cameras to be used without calibration. This work is a key step toward automatically interpreting more general camera geometries and emerging camera technologies.
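A ray-based renderer is camera-agnostic because it consumes rays rather than pixel coordinates: any camera, once its pixels are mapped to rays, feeds the same network. The sketch below illustrates this under our own assumptions. It uses a pinhole model to generate rays (with `fx, fy, cx, cy` standing in for the intrinsics NOCaL would estimate) and a Plücker-coordinate ray encoding of the kind used by light field networks. The function names are hypothetical and NOCaL's actual ray parametrization may differ.

```python
import math


def pixel_to_ray(u, v, fx, fy, cx, cy, R, t):
    """Back-project pixel (u, v) through a pinhole camera.

    R is the 3x3 camera-to-world rotation (list of rows) and t the camera
    centre in world coordinates. Returns (origin, unit direction) in world frame.
    """
    d_cam = [(u - cx) / fx, (v - cy) / fy, 1.0]
    d_world = [sum(R[i][j] * d_cam[j] for j in range(3)) for i in range(3)]
    norm = math.sqrt(sum(c * c for c in d_world))
    return list(t), [c / norm for c in d_world]


def cross(a, b):
    """3-D cross product a x b."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def plucker(origin, direction):
    """6-D Plücker coordinates (d, m) with moment m = o x d.

    Any point o + s*d on the same line gives the same moment, so the encoding
    depends only on the ray itself, not on which camera produced it.
    """
    return direction + cross(origin, direction)
```

As a usage example, a camera at the origin with `fx = 100, cx = 50` and a second camera placed further along the same line with `fx = 200, cx = 100` map pixels `u = 125` and `u = 250` respectively to the same ray, so both produce (up to floating-point rounding) identical Plücker coordinates despite having different intrinsics and poses.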

Publications

• R. Griffiths, J. Naylor, and D. G. Dansereau, “NOCaL: Calibration-free semi-supervised learning of odometry and camera intrinsics,” Robotics and Automation (ICRA), 2023. Preprint here.


Citing

@article{griffiths2023nocal,
  title = {{NOCaL}: Calibration-Free Semi-Supervised Learning of Odometry and Camera Intrinsics},
  author = {Ryan Griffiths and Jack Naylor and Donald G. Dansereau},
  journal = {Robotics and Automation ({ICRA})},
  year = {2023}
}