Projects: Algorithms & Architectures

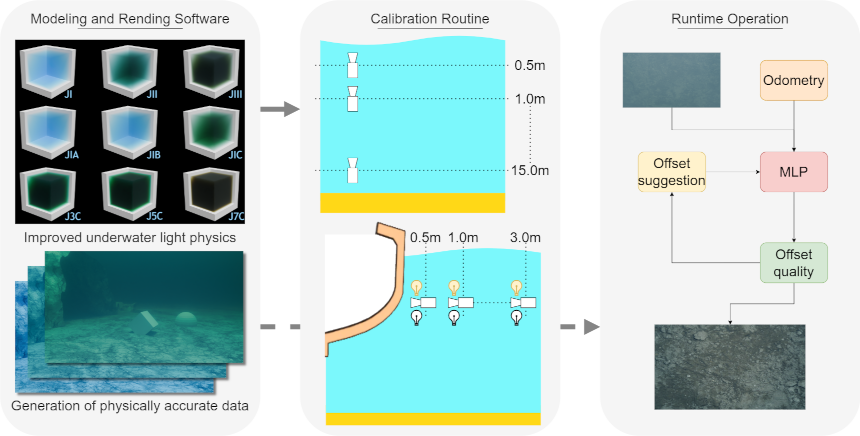

- We increase the autonomy capabilities of underwater robotic platforms.

- We improve the simulation of underwater imagery in the Blender modeling software, including more accurate light behaviour in water and models of the oceans.

- We introduce a method for in-situ water column property estimation using a monocular camera and adjustable illumination.

- We design a framework providing online guidance suggestions to maintain high-quality data collection and maximise visual coverage in a broad range of water conditions.

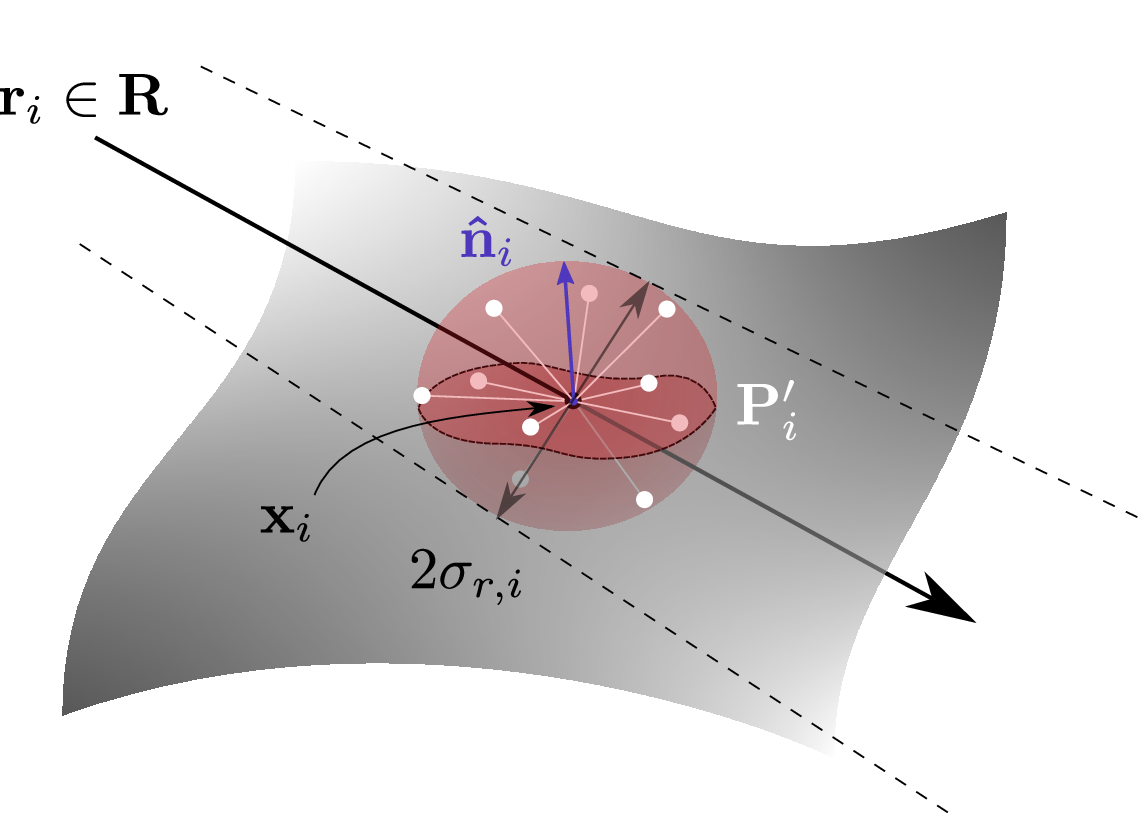

- 3D change detection with Gaussian splatting.

- Label-free and robust to viewpoint changes between trajectories.

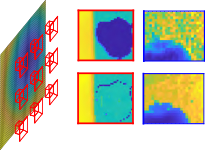

- New dataset of ten challenging scenes with structural and surface changes.

- Surface light field inspired regularisation to improve geometric fidelity of NeRF-based representations

- We propose a second sampling of the representation to regularise local appearance and geometry at surfaces in the scene

- Applicable to future NeRF-based models leveraging reflection parameterisation

- An effective light field segmentation method

- Combines epipolar constraints with the rich semantics learned by the pretrained SAM 2 foundation model for cross-view mask matching

- Produces results of the same quality as the Segment Anything 2 (SAM 2) video tracking baseline, while being 7 times faster

- Runs inference in real time for autonomous driving problems such as object pose tracking

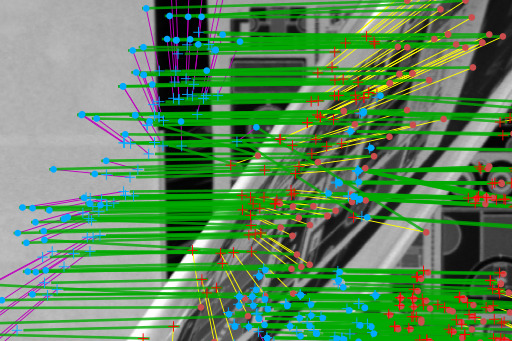

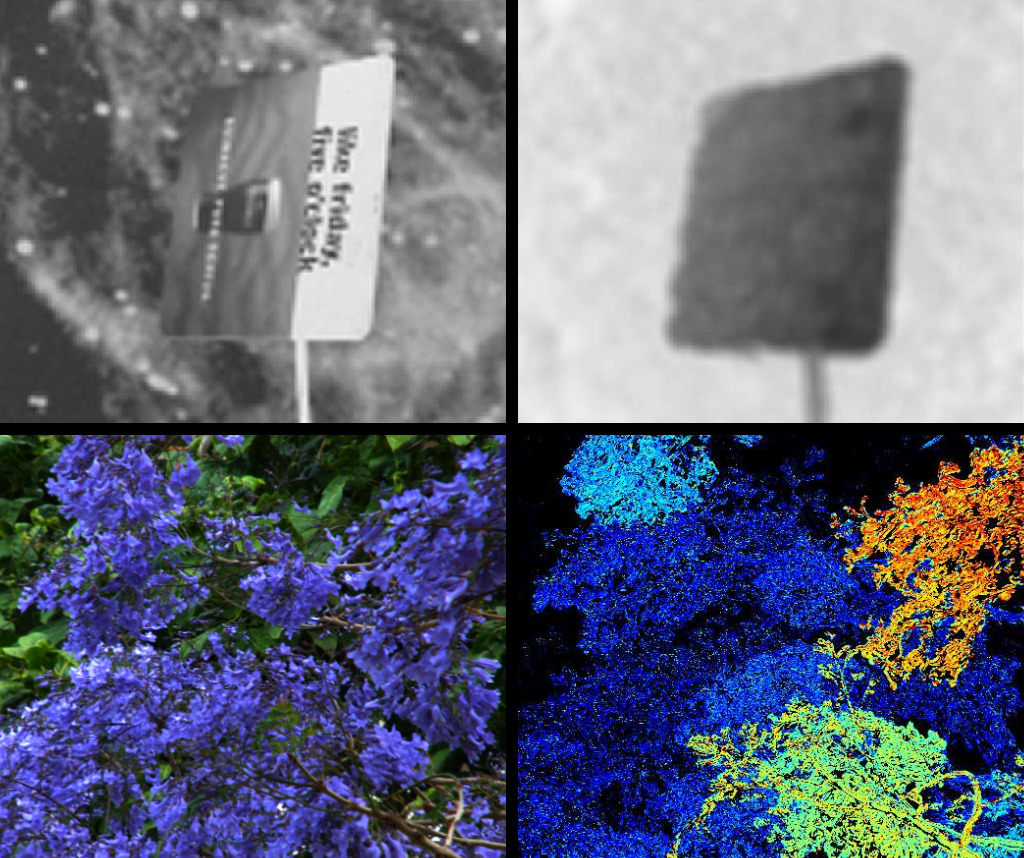

- A learned feature detector and descriptor for bursts of images

- Noise-tolerant features outperform the state of the art in low light

- Enables 3D reconstruction from drone imagery in millilux conditions

- We describe the hyperbolic view dependency in time-of-flight fields

- Our all-in-focus filter improves 3D fidelity and robustness to noise and saturation

- We release a dataset of thirteen 15 × 15 time-of-flight field images

- We introduce burst feature finder, a 2D + time feature detector and descriptor for 3D reconstruction

- Finds features with well-defined scale and apparent motion within a burst of frames

- Approximates apparent feature motion under typical robotic platform dynamics, enabling critical refinements for hand-held burst imaging

- More accurate camera pose estimates, matches and 3D points in low-SNR scenes

- We adapt burst imaging for 3D reconstruction in low light

- Combining burst methods locally with feature-based methods over broad motions draws on the strengths of each

- Allows 3D reconstructions where conventional imaging fails

- More accurate camera trajectory estimates, 3D reconstructions, and lower overall computational burden

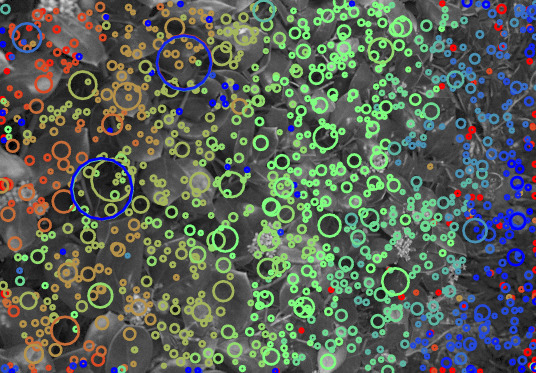

- A new kind of feature that exists in the patterns of light refracted through objects

- Allows 3D reconstructions where SIFT / LiFF fail

- More accurate camera trajectory estimates and 3D reconstructions in complex refractive scenes

- SIFT-like features for light fields

- Robust to occlusions, noise, and high-order light transport effects

- Each feature has a well-defined depth / light field slope

- Closed-form change detection from moving cameras

- Model-free handling of nonuniform apparent motion from 3D scenes

- Handles failure modes from competing single-camera methods

- The approach generalizes to simplify other moving-camera problems

- A linear filter that focuses on a volume instead of a plane

- Enhanced imaging in low light and through murky water and particulates

- Derivation of the hypercone / hyperfan as the fundamental shape of the light field in the frequency domain

Light field depth-velocity filtering

- A 5D light field + time filter that selects for depth and velocity

- C. U. S. Edussooriya, D. G. Dansereau, L. T. Bruton, and P. Agathoklis, “Five-dimensional (5-D) depth-velocity filtering for enhancing moving objects in light field videos,” IEEE Transactions on Signal Processing (TSP), vol. 63, no. 8, pp. 2151–2163, April 2015.

Gradient-based depth estimation from light fields

- Depth from local gradients

- Simple ratio of differences, easily parallelized

- D. G. Dansereau and L. T. Bruton, “Gradient-based depth estimation from 4D light fields,” in Intl. Symposium on Circuits and Systems (ISCAS), 2004, vol. 3, pp. 549–552.
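
As a rough illustration of the "ratio of differences" idea, the Python sketch below estimates the slope of a 2D epipolar slice from its local gradients. It assumes a Lambertian scene in which the slice has the form L(s, u) = f(u - m·s), so that the slope is m = -(∂L/∂s)/(∂L/∂u). The function name `epi_slope_from_gradients`, the synthetic sinusoidal slice, and the least-squares pooling over the patch are illustrative assumptions, not the implementation from the cited paper. Converting slope to metric depth depends on the light field parameterisation and is not shown.

```python
import numpy as np


def epi_slope_from_gradients(epi, eps=1e-12):
    """Estimate one slope m for a 2D epipolar slice L(s, u).

    Assumes L(s, u) = f(u - m * s), which implies dL/ds = -m * dL/du.
    (Hypothetical helper for illustration only.)
    """
    # np.gradient returns one array per axis: axis 0 is s, axis 1 is u.
    dL_ds, dL_du = np.gradient(epi)

    # Drop the border, where np.gradient falls back to one-sided differences.
    dL_ds = dL_ds[1:-1, 1:-1]
    dL_du = dL_du[1:-1, 1:-1]

    # Least-squares ratio of differences pooled over the patch.
    numerator = np.sum(dL_ds * dL_du)
    denominator = np.sum(dL_du ** 2)
    return -numerator / (denominator + eps)


# Synthetic slice: 9 views (s) by 64 pixels (u), with a known slope.
s = np.arange(9, dtype=float)[:, None]
u = np.arange(64, dtype=float)[None, :]
true_slope = 0.5
epi = np.sin(0.2 * (u - true_slope * s))

estimated_slope = epi_slope_from_gradients(epi)
print(f"true slope {true_slope:.3f}, estimated slope {estimated_slope:.3f}")
```

Each pixel's gradient products are independent of the others, which is why an estimator of this kind parallelizes easily; the pooling step could equally be applied over small local windows to obtain a per-pixel slope map.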

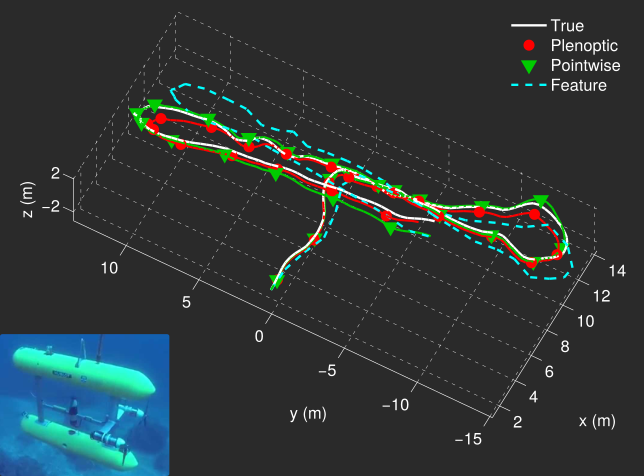

Plenoptic flow for closed-form visual odometry

- Generalization of 2D Lucas–Kanade optical flow to 4D light fields

- Links camera motion and apparent motion via first-order light field derivatives

- Solves for 6-degree-of-freedom camera motion in 3D scenes, without explicit depth models

- D. G. Dansereau, I. Mahon, O. Pizarro, and S. B. Williams, “Plenoptic flow: Closed-form visual odometry for light field cameras,” in Intelligent Robots and Systems (IROS), 2011, pp. 4455–4462.

- See also Ch. 5 of D. G. Dansereau, “Plenoptic signal processing for robust vision in field robotics,” PhD thesis, Australian Centre for Field Robotics, School of Aerospace, Mechanical and Mechatronic Engineering, The University of Sydney, 2014.
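
For context, the 2D Lucas–Kanade formulation that plenoptic flow generalizes can be sketched as follows. This is the classic single-camera, image-plane case under brightness constancy (Ix·vx + Iy·vy + It = 0), solved in closed form by least squares. It is not the 4D light field method or its 6-degree-of-freedom solution, and the function name `lucas_kanade_translation` and the synthetic test image are assumptions made for illustration.

```python
import numpy as np


def lucas_kanade_translation(frame0, frame1):
    """Estimate one global 2D translation (vx, vy) between two frames.

    Classic 2D Lucas-Kanade under brightness constancy:
        Ix * vx + Iy * vy + It = 0
    (Hypothetical helper for illustration only.)
    """
    # Spatial gradients of the mean frame; axis 0 is y (rows), axis 1 is x.
    Iy, Ix = np.gradient(0.5 * (frame0 + frame1))
    # Temporal derivative as a simple frame difference.
    It = frame1 - frame0

    # Drop the border, where np.gradient uses one-sided differences.
    Ix, Iy, It = (a[1:-1, 1:-1].ravel() for a in (Ix, Iy, It))

    # Stack one constraint per pixel and solve by least squares.
    A = np.stack([Ix, Iy], axis=1)
    flow, *_ = np.linalg.lstsq(A, -It, rcond=None)
    return flow  # array([vx, vy])


def synthetic_image(x, y):
    """Smooth test pattern so first-order derivatives are meaningful."""
    return np.sin(0.1 * x) + np.cos(0.15 * y)


y, x = np.mgrid[0:64, 0:64].astype(float)
true_flow = np.array([0.3, -0.2])  # sub-pixel shift (vx, vy)

frame0 = synthetic_image(x, y)
frame1 = synthetic_image(x - true_flow[0], y - true_flow[1])

estimated_flow = lucas_kanade_translation(frame0, frame1)
print("true flow", true_flow, "estimated flow", estimated_flow)
```

The same structure, one linear constraint per sample built from first-order derivatives and solved jointly, is what makes a closed-form solution possible; the 4D case replaces image gradients with light field derivatives and the 2D shift with camera motion parameters, as described in the cited work.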