Hyperbolic View Dependency for All-in-Focus Time of Flight Fields

- We discover and describe a hyperbolic view dependency in the distance values of ToF fields,

- We propose the first time of flight all-in-focus filter, exploiting this view dependency, offering enhanced noise rejection and depth accuracy compared to previous methods, and

- We augment the filter to correctly handle occlusion boundaries and reject saturation from specularities, further improving accuracy and robustness.

These improvements widen the range of conditions in which robotic systems can rely on ToF measurements for higher-level tasks such as grasping and human-robot interaction.
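The view dependency named in the contributions can be illustrated with a small geometric sketch. It assumes (this is not stated on this page) that each ToF camera reports the radial distance from its own centre to the scene point, and that the cameras sit on a line at positions `u` with the point at depth `z`. Under those assumptions the measured distance `r` satisfies `r^2 - (x - u)^2 = z^2`, which is a hyperbola in `u`. The function names and camera layout are hypothetical and chosen only for illustration; the paper's own formulation may differ.

```python
import math

def radial_distance(point, cam_u, cam_v=0.0):
    """Radial distance from a camera at (cam_u, cam_v, 0) to a 3D point.

    Assumption: the ToF camera measures distance along the ray,
    not depth along the optical axis.
    """
    x, y, z = point
    return math.sqrt((x - cam_u) ** 2 + (y - cam_v) ** 2 + z ** 2)

def distances_across_views(point, cam_positions):
    """Distance to the same point as seen from each camera on a linear array."""
    return [radial_distance(point, u) for u in cam_positions]

# Hypothetical setup: five cameras spaced 0.1 m apart, point 1 m away.
point = (0.1, 0.0, 1.0)
cams = [-0.2, -0.1, 0.0, 0.1, 0.2]
dists = distances_across_views(point, cams)

# Hyperbola check: r^2 - (x - u)^2 - z^2 should vanish for every view.
residuals = [d ** 2 - (point[0] - u) ** 2 - point[2] ** 2
             for d, u in zip(dists, cams)]

for u, d in zip(cams, dists):
    print(f"camera u={u:+.1f} m  distance={d:.4f} m")
```

Because the distance to a single point changes from view to view, simply averaging the per-view values would bias the result. Under the assumptions above, that is one way to see why the dependency has to be modelled before views are combined.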

Publications

• A. Taras and D. G. Dansereau, “Hyperbolic view dependency for all-in-focus time of flight fields,” Australasian Conference on Robotics and Automation (ACRA), 2022. Available here.

Acknowledgments

Downloads

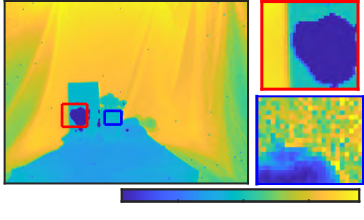

Data available for download here. The preview on the left shows the depth (top) and amplitude (bottom) channels of one of 13 scenes.

Our dataset stores both the depth and amplitude images from each view in the camera array. We provide a wide variety of scenes, including planar targets, highly reflective objects (plastic boxes, shiny mannequin heads, fake fruit), occluded scenes, and challenging cases with refraction and reflection intended for future work.

See the dataset readme file here for further details.

Gallery


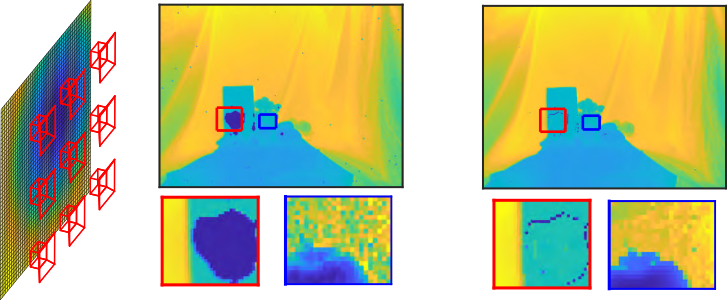

By using multiple views simultaneously, our pipeline produces higher-quality depth images.

The hyperbolic view dependency is novel and illustrates why typical light field techniques cannot be directly applied.

Citing

@inproceedings{taras2022hyperbolic,
  title = {Hyperbolic View Dependency for All-in-Focus Time of Flight Fields},
  author = {Adam K. Taras and Donald G. Dansereau},
  booktitle = {Australasian Conference on Robotics and Automation (ACRA)},
  year = {2022},
  publisher = {ARAA}
}